I was working with an evaluator the other day who needed an example of how to use the Scrutinizer API to generate data and export it to a CSV file. Honestly, I knew how the API functioned, but didn’t have much experience using it. With that in mind and being the daredevil that I am, I figured this evaluator’s request would be the perfect opportunity to roll up my sleeves and get my hands dirty.

Normally, the tried and true link that we send to people interested in learning more about using Scrutinizer’s API functionality is the post my co-worker Jennifer Maher created. Her information gave me a better understanding of how the API engine dealt with things like authentication and how the JSON object works, but my goal was to provide a working example. This meant that I needed to dig deeper into the process and learn more about how Scrutinizer uses JSON to package the data for export.

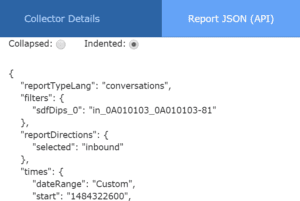

Seeing the JSON Object

One of the newer features in Scrutinizer is the Report Details option. It’s a blue button located on the left-hand side of the screen in the reports tool box. This button provides detailed information about the report you are working with. Specifically, it provides you with a standardized JSON object that can be easily called from other applications and scripts. NICE! I could use this information and the details in the Flow View report to help identify the flow element names that I want to call via my API-to-CSV script.

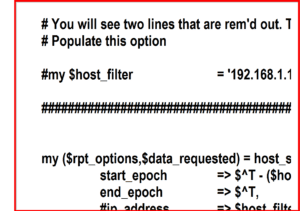

I felt like I was making progress, but to be completely honest, I was still a bit confused. Although I could call this JSON object via an HTTP request, I didn’t yet know how this data would be used in my own script. The good news is that my buddies over in development had already created a few example scripts for end users to experiment with. These scripts are located in the /scrutinizer/files/api_examples/ directory of your install. Now it was time to write this thing.

Highlights from my script

After a few hours and a bunch of questions to development, I started to get my head around the process. (I also quickly remembered why I was no longer a coder.) Although I have included my fully commented Perl script example with this post, I wanted to highlight a few of the subroutines.

sub format_report_data

In this routine, we are formatting the data we get from the search request. In this example, we have five variables.

$src_ip, $dst_ip, $bytes, $first_flow_ts, $last_flow_ts

Then we populate the hash with the elements:

## Here is where we populate the hash with the flow elements ##

$row->[$indices{'sourceipaddress'}]->{'label'},

$row->[$indices{'destinationipaddress'}]->{'label'},

$row->[$indices{'sum_octetdeltacount'}]->{'label'},

# Here is where we populate the hash with an EPOCH timestamp.

$row->[$indices{'first_flow_epoch'}]->{'label'},

$row->[$indices{'last_flow_epoch'}]->{'label'},

# This is an example of how you can format it as human-readable local time

#scalar(localtime($row->[$indices{'first_flow_epoch'}]->{'label'})),

#scalar(localtime($row->[$indices{'last_flow_epoch'}]->{'label'})),

This line is what constructs the output data. As you can see, I used commas as delimiters.

$formatted_data .= qq{$first_flow_ts,$last_flow_ts,$src_ip,$dst_ip,$bytes\n};
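To see the pieces above working together, here is a minimal, self-contained sketch of the formatting step. It is not the script attached to this post: the sub name `format_rows`, the sample row, and the index positions are all made up for illustration. The only thing carried over is the `$row->[$indices{...}]->{'label'}` access pattern and the comma-delimited output line.

```perl
use strict;
use warnings;

# Turn report rows into comma-delimited lines.
# Assumption: each row is an array of cells, and each cell is a hash with a
# 'label' key, matching the access pattern shown in the highlights above.
sub format_rows {
    my ($rows, $indices) = @_;
    my @fields = qw(sourceipaddress destinationipaddress sum_octetdeltacount
                    first_flow_epoch last_flow_epoch);
    my $formatted_data = '';
    for my $row (@$rows) {
        # Look up each flow element by name, then pull out its label.
        my ($src_ip, $dst_ip, $bytes, $first_flow_ts, $last_flow_ts)
            = map { $row->[ $indices->{$_} ]->{'label'} } @fields;
        $formatted_data .= qq{$first_flow_ts,$last_flow_ts,$src_ip,$dst_ip,$bytes\n};
    }
    return $formatted_data;
}

# Hypothetical column positions and a single made-up row, for illustration only.
my %indices = (
    sourceipaddress      => 0,
    destinationipaddress => 1,
    sum_octetdeltacount  => 2,
    first_flow_epoch     => 3,
    last_flow_epoch      => 4,
);
my @rows = (
    [ { label => '10.1.1.5' }, { label => '10.1.2.9' }, { label => 48213 },
      { label => 1400000000 }, { label => 1400000060 } ],
);

print format_rows(\@rows, \%indices);
```

With the sample row above, this prints a single line: `1400000000,1400000060,10.1.1.5,10.1.2.9,48213`.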

sub get_scrutinizer_data

This section makes the request to the Scrutinizer server. I didn’t make any changes to this section of the code as it is pretty standard.

sub host_search_request

This section builds the JSON request and returns the object’s data. Here is where you can add the various JSON objects discussed above.
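If you want to experiment with building a request body before touching the real script, something like the sketch below might help. To be clear, this is not the `host_search_request` code from the attached script, and the JSON keys are placeholders I made up, not Scrutinizer’s actual schema. The idea is to start from the JSON text you copied out of Report Details, change the fields you care about, and encode it again. It uses JSON::PP, which ships with Perl.

```perl
use strict;
use warnings;
use JSON::PP;

# Decode a JSON object, apply any overrides, and re-encode it.
# canonical() sorts the keys so the output is stable between runs.
sub build_request_body {
    my ($json_text, %overrides) = @_;
    my $request = decode_json($json_text);
    $request->{$_} = $overrides{$_} for keys %overrides;
    return JSON::PP->new->canonical->encode($request);
}

# Placeholder standing in for JSON copied from Report Details.
# These keys are hypothetical; use the ones your own report shows.
my $report_details_json = '{"exampleKey":"exampleValue","exampleRange":"5m"}';

print build_request_body($report_details_json, exampleRange => '1h'), "\n";
```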

Exporting the data

Then I needed to export the data to a CSV file. My example script prints the data to the screen. Since this was just a proof of concept (POC), I decided to save some time and redirect the output to a CSV file with the command below. I also added the date to the end of the file name to make it unique.

perl api_report_example_test.pl > "JIMMYDCSV_$(date '+%y-%m-%d').csv"

Redirecting the output to a file is a simple way to export the data as it’s created. In my example, you would add this as a cron job to automate the process. In real life, you might want to add a routine to your script that writes directly to a CSV file and skips the redirect. I would also add a complete timestamp to the file name. In the future, to make the script smarter, I would also add code to send a syslog message back to Scrutinizer to mark the start and stop of the process.
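For anyone who wants to go the “write the file from the script” route, here is a rough sketch of what that routine could look like. The sub names `csv_filename` and `write_csv` are my own inventions and are not part of the example scripts that ship with Scrutinizer. The sample data line is made up as well. It uses `strftime` from the core POSIX module to put a full date and time in the file name.

```perl
use strict;
use warnings;
use POSIX qw(strftime);

# Build a file name carrying a full local timestamp: PREFIX_YYYY-MM-DD_HHMMSS.csv
sub csv_filename {
    my ($prefix, $epoch) = @_;
    my $stamp = strftime('%Y-%m-%d_%H%M%S', localtime($epoch));
    return "${prefix}_${stamp}.csv";
}

# Write already formatted CSV text to $path and die on any I/O failure,
# so a cron job fails loudly instead of leaving a partial file unnoticed.
sub write_csv {
    my ($path, $formatted_data) = @_;
    open(my $fh, '>', $path) or die "Cannot open $path: $!";
    print {$fh} $formatted_data or die "Cannot write to $path: $!";
    close($fh) or die "Cannot close $path: $!";
    return $path;
}

# Made-up sample line; in a real script this would be the formatted report data.
my $sample = "1400000000,1400000060,10.1.1.5,10.1.2.9,48213\n";
my $file   = write_csv(csv_filename('JIMMYDCSV', time), $sample);
print "Wrote $file\n";
```

Because the time of day is part of the name, running the job more than once a day no longer overwrites the earlier export.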

The API gives you the flexibility needed for integration into various applications. This allows flow data to be available for billing (accounting, legal, medical, ISPs…), security, auditing, and any other organizational needs. If you have any questions, make sure to contact our support team to help with your NetFlow API Integration.