UPDATE: You can download our Kibana integration for Scrutinizer here: https://files.plixer.com/resources/configs/scrutinizer-elk.zip

Our support team recently received a request for Elasticsearch NetFlow integration. For those of you new to Elasticsearch, it is often positioned as a lower-cost alternative to Splunk. Elasticsearch, Logstash, and Kibana (together known as ELK) are usually combined to deliver a top-notch log storage and mining solution, and companies such as eBay, LinkedIn, and Wikipedia use it. The three parts are as follows:

- Elasticsearch: a distributed, open source search and analytics engine, designed for horizontal scalability, reliability, and easy management. It combines the speed of search with the power of analytics via a sophisticated, developer-friendly query language covering structured, unstructured, and time-series data.

- Logstash: a flexible, open source data collection, enrichment, and transportation pipeline. With connectors to common infrastructure for easy integration, Logstash is designed to efficiently process a growing list of log, event, and unstructured data sources for distribution into a variety of outputs, including Elasticsearch.

- Kibana: an open source data visualization platform that allows you to interact with your data through stunning, powerful graphics. From histograms to geomaps, Kibana brings your data to life with visuals that can be combined into custom dashboards that help you share insights from your data far and wide.

The simplest integration is what I will focus on today. It uses a URL that we pass to Kibana with certain variables filled in. The URL looks like this:

```
[kibanaserverurl]/#/discover?_g=(refreshInterval:(display:Off,pause:!f,section:0,value:0),time:(from:'%zs',mode:absolute,to:'%ze'))&_a=(columns:!(_source),index:'logstash-*',interval:auto,query:(query_string:(analyze_wildcard:!t,query:'%i')),sort:!('@timestamp',desc))
```

The variables that we need to insert into the URL are defined as follows:

%zs = the start of the time window, in the format 2015-10-05T12:30:11.715Z. This example is really 8:30 a.m. our time (UTC-4). Scrutinizer's epoch timestamp needs to be converted to Zulu time (UTC).

%ze = the end of the time window, in the same format, e.g. 2015-10-05T12:45:11.715Z (8:45 a.m. our time). This epoch also needs to be converted to Zulu time. After further research, we learned that Kibana supports the ISO 8601 time format, which is the format these timestamps use.

%i = the host we want to search for, e.g. 10.1.5.1. Scrutinizer already supports this variable.
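Scrutinizer performs this substitution itself when you launch the menu option, but the epoch-to-Zulu conversion is easy to get wrong, so here is a minimal sketch of it. The names `KIBANA_URL`, `to_zulu`, and `fill_template` are hypothetical, and `kibana.example.com:5601` is a placeholder host (5601 is Kibana's default port). The template is the readable URL shown above.

```python
from datetime import datetime, timezone

# Hypothetical Kibana host; replace with your own server.
KIBANA_URL = "http://kibana.example.com:5601"

# Readable Kibana Discover URL template from above, with %zs, %ze and %i placeholders.
TEMPLATE = (
    KIBANA_URL
    + "/#/discover?_g=(refreshInterval:(display:Off,pause:!f,section:0,value:0),"
    "time:(from:'%zs',mode:absolute,to:'%ze'))"
    "&_a=(columns:!(_source),index:'logstash-*',interval:auto,"
    "query:(query_string:(analyze_wildcard:!t,query:'%i')),sort:!('@timestamp',desc))"
)


def to_zulu(epoch_seconds: float) -> str:
    """Convert a Unix epoch (seconds) to ISO 8601 UTC ("Zulu") with milliseconds."""
    dt = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)
    return dt.strftime("%Y-%m-%dT%H:%M:%S.") + f"{dt.microsecond // 1000:03d}Z"


def fill_template(template: str, start: float, end: float, host: str) -> str:
    """Hypothetical helper: substitute %zs, %ze and %i into the URL template."""
    return (
        template.replace("%zs", to_zulu(start))
        .replace("%ze", to_zulu(end))
        .replace("%i", host)
    )
```

For example, `fill_template(TEMPLATE, 1444048211.715, 1444049111.715, "10.1.5.1")` yields a URL whose time range runs from `'2015-10-05T12:30:11.715Z'` to `'2015-10-05T12:45:11.715Z'`. Converting through a timezone-aware `datetime` avoids accidentally emitting local time with a `Z` suffix.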

More details on executing search queries through the Elasticsearch API are available on the Elastic website.
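For comparison, the same search can be expressed as an Elasticsearch search request body. This is a sketch under assumptions: `build_search_body` is a hypothetical helper, the events are assumed to live in `logstash-*` indices with an `@timestamp` field (as in the Kibana URL), and the `bool` query's `filter` clause requires Elasticsearch 2.0 or later. The body would be sent to the `/logstash-*/_search` endpoint.

```python
import json


def build_search_body(host: str, start_iso: str, end_iso: str) -> dict:
    """Hypothetical helper: a search body mirroring the Kibana Discover URL."""
    return {
        "query": {
            "bool": {
                # Same free-text query_string search Kibana runs for %i.
                "must": {"query_string": {"query": host, "analyze_wildcard": True}},
                # Restrict results to the %zs..%ze time window.
                "filter": {"range": {"@timestamp": {"gte": start_iso, "lte": end_iso}}},
            }
        },
        # Newest events first, like sort:!('@timestamp',desc) in the URL.
        "sort": [{"@timestamp": {"order": "desc"}}],
    }


body = build_search_body("10.1.5.1", "2015-10-05T12:30:11.715Z", "2015-10-05T12:45:11.715Z")
print(json.dumps(body, indent=2))
```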

To launch Kibana from Scrutinizer, our NetFlow analyzer, we placed a URL-encoded version of the URL above in a file called applications.cfg, located in the /files/ directory of the Scrutinizer installation:

```
Elastic, http://<elastichost>/#/discover?_g=(refreshInterval%3A(display%3AOff%2Cpause%3A!f%2Csection%3A0%2Cvalue%3A0)%2Ctime%3A(from%3A%27%zs%27%2Cmode%3Aabsolute%2Cto%3A%27%ze%27))&_a=(columns%3A!(_source)%2Cindex%3A%27logstash-*%27%2Cinterval%3Aauto%2Cquery%3A(query_string%3A(analyze_wildcard%3A!t%2Cquery%3A%27%i%27))%2Csort%3A!(%27%40timestamp%27%2Cdesc)), Elastic
```
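The encoded entry can be generated from the readable URL instead of being edited by hand. In this minimal sketch, `encode_state` and `applications_cfg_line` are hypothetical helpers. It percent-encodes only the `_g` and `_a` state values, turning `:`, `,`, `'` and `@` into escapes while leaving Kibana's rison punctuation (`(`, `)`, `!`, `*`) and the `%` of Scrutinizer's `%zs`/`%ze`/`%i` placeholders untouched, which reproduces the line above.

```python
from urllib.parse import quote

# Readable _g and _a state from the Kibana URL (Kibana's rison syntax).
G_STATE = ("(refreshInterval:(display:Off,pause:!f,section:0,value:0),"
           "time:(from:'%zs',mode:absolute,to:'%ze'))")
A_STATE = ("(columns:!(_source),index:'logstash-*',interval:auto,"
           "query:(query_string:(analyze_wildcard:!t,query:'%i')),"
           "sort:!('@timestamp',desc))")


def encode_state(state: str) -> str:
    # Keep rison punctuation and '%' (for the placeholders) unescaped;
    # quote() escapes everything else outside letters, digits and "_.-~".
    return quote(state, safe="()!*%")


def applications_cfg_line(name: str, kibana_url: str) -> str:
    """Hypothetical helper: build a 'Name, URL, Name' entry for applications.cfg."""
    url = (f"{kibana_url}/#/discover?_g={encode_state(G_STATE)}"
           f"&_a={encode_state(A_STATE)}")
    return f"{name}, {url}, {name}"


print(applications_cfg_line("Elastic", "http://<elastichost>"))
```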

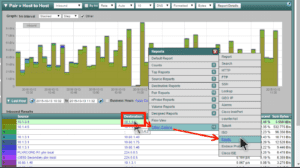

Notice that the URL is preceded by ‘Elastic,’ and followed by ‘, Elastic’. These values set the name of the option in the menu that appears, as shown in the image below, when you click on an IP address in Scrutinizer. You could just as easily name it ‘ELK’.

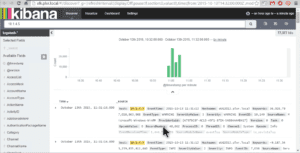

When you click on the ‘Elastic’ option in the menu above, a new browser window opens with the Kibana interface. In the URL, Scrutinizer passes the IP address that was clicked on (10.1.4.5) as well as the time frame. Notice the results of this ELK NetFlow support as shown below:

We will simplify this integration process in a future release, but for now it is a simple and quick setup. We have also set up Splunk NetFlow support.

With the Elasticsearch NetFlow integration from Scrutinizer to ELK complete, we now need to go the other way, from ELK to the NetFlow data in Scrutinizer, using a similar process. After a bit of research, we learned that this direction will take a little more work. We should have it working in a few days; first, I’m off to a customer site. In the meantime, call our support team if you need help setting this up.